Client

Large NY-based life insurance and investment company

Goal

Modernize technology systems in commercial real estate functions to reduce bottlenecks, response times, and vulnerabilities

Tools and Technologies

.NET 8, Angular 17+, Docker, Kubernetes, GitHub, AWS

Business Challenge

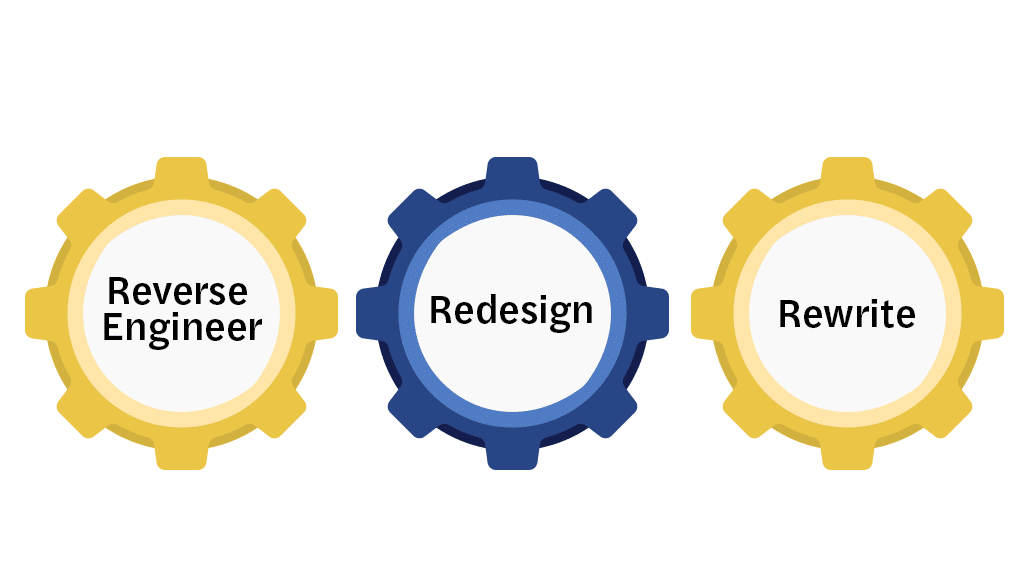

The insurer’s commercial real estate mortgage unit required upgrades to the technology systems supporting its loan processing and cash management capabilities. Reliance on .NET Framework 4.8 and Angular 9 posed significant limitations, including restricted agility, difficulty adopting microservices, and challenges attracting top engineering talent. The existing monolithic, non-cloud-native architecture led to performance bottlenecks and slow response times. Outdated protocols created security vulnerabilities, while limited DevOps integration and complex debugging made cloud migration costly and inefficient.

Solution

- Migrated the technology stack to .NET 8 and Angular 17+ for better cross-platform compatibility and long-term performance

- Adopted Docker and Kubernetes to enable scalable, cloud-native deployments on AWS

- Implemented CI/CD automation using GitHub Actions for faster and more secure release cycles

- Refactored the legacy application into a modular monolith and microservices for agility and maintainability

Outcomes

- Improved application performance and responsiveness, delivering faster, smoother user experiences

- Boosted developer productivity and team collaboration, and streamlined testing and onboarding

- Strengthened security and compliance with up-to-date platforms and better integration with security tools

- Enabled scalable, reliable, simplified deployments using containerization and orchestration

- Reduced long-term maintenance effort and costs through clean, modular code and better monitoring

Our experts can help you find the right solutions to meet your needs.

Eagle Access Data Platform Transforms Accounting

Client

Large NY-based life insurance and investment company

Goal

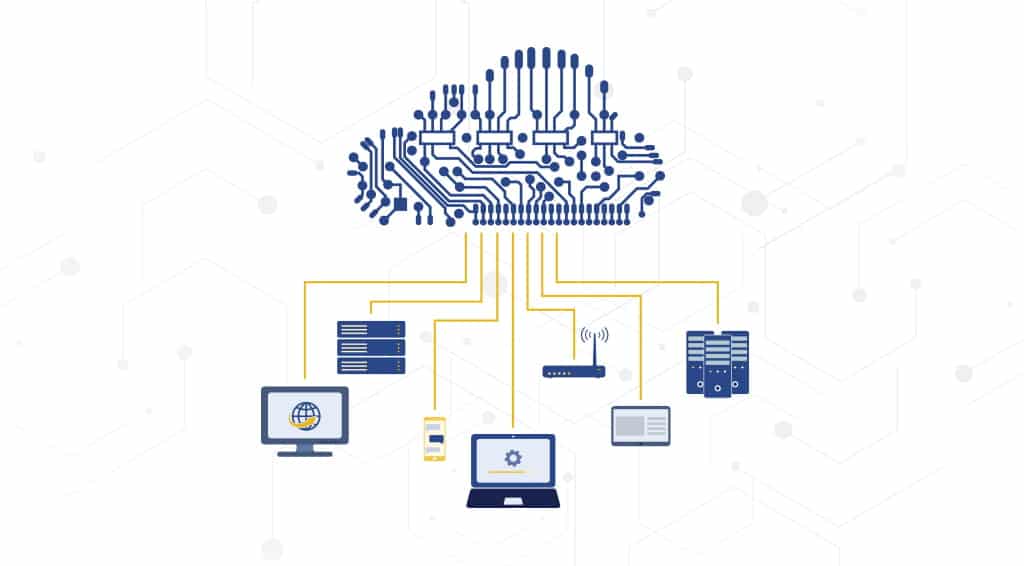

Consolidate multiple accounting systems into a centralized, reliable data warehouse to improve reporting and decision-making

Tools and Technologies

BNY Eagle Access, Python, Oracle, AWS EC2 and S3, SQL Server, Jira, Stonebranch

Business Challenge

The insurer faced growing inefficiencies due to siloed accounting systems that lacked integration, consistency, and scalability. Reporting processes were time-consuming, error-prone, and lacked real-time visibility—hindering timely business and investment decisions. A centralized solution was needed to ingest and unify data from disparate systems like SAP GL, Singularity, and Loan Management into a single, trusted platform to support strategic financial insights and reduce operational complexity.

Solution

- Built a centralized accounting data warehouse using the Eagle Access secure private cloud environment

- Ingested and standardized data from SAP GL, Singularity, and Loan Management systems

- Used AWS EC2, S3, and Stonebranch Universal Automation Controller for cloud infrastructure and job orchestration

- Enabled real-time reporting via Tableau integration and migration of legacy dashboards

- Improved data accuracy and consistency through robust validation and automation

Outcomes

- Created a unified source of truth for all accounting data

- Enabled faster, more accurate reporting and analytics, improving business and investment decision-making

- Reduced data silos and improved accessibility across systems

- Minimized infrastructure complexity and operational risk with secure private cloud hosting

- Enhanced efficiency through automated data processing and orchestration


How Generative AI adds business value

Generative AI can address problems that traditional approaches cannot solve, improve customer and employee satisfaction, and boost productivity and quality.

Generative AI Experience

Document Intelligence and Automation

Leverage AI-driven Q&A, document summarization, and automated data extraction to streamline the processing of large volumes of complex, information-heavy documents such as policies, processes, procedures, and reports. Our solutions simplify access to critical information, enabling faster decision-making, enhanced compliance, and reduced manual effort.

Configurable, Enterprise-ready AI Platforms

Next-generation Conversational Assistants

Natural language interfaces across documents, data, and APIs unify access to structured and unstructured information. From wealth management, insurance, and commercial lending to market risk and AML, our AI-driven virtual assistants provide seamless access to critical insights and streamline interactions by summarizing data, automating report generation, and answering complex questions with high accuracy.

AI Assistant for Software Engineering

Coding assistants are great, but what about the rest of the engineering lifecycle? An extensible Gen AI-powered software engineering toolkit, featuring browser and IDE extensions, integrates seamlessly with tools such as GitHub and Jira. Designed to enhance often-overlooked areas of the software development lifecycle, it streamlines requirement validation, test case generation, and standards conformance, improving the developer experience and boosting productivity and quality.

Agentic AI

From business use cases to software engineering, agentic AI has significant potential to dynamically leverage Gen AI and related technologies, such as the Model Context Protocol (MCP) and the Agent2Agent (A2A) protocol, to plan and automate complex activities using multiple agents, APIs, resources, and prompts. While early agentic AI use cases were based on static workflows, recent frameworks and models can handle more complex and diverse use cases.

Value We Provide

Scalable Approach to Generative AI Adoption

Our structured approach to adoption often starts with a proof of concept (PoC) in a sandbox environment, evolves into a pilot, and ultimately scales into full production-grade solutions. This enables organizations to expand their use of Generative AI across a wide range of use cases while maximizing ROI.

Accelerating Business Impact and Improving Decision Intelligence

We streamline workflows by integrating AI into document processing, risk analysis, compliance reporting, and customer support. Our automation solutions reduce manual effort, enhance accuracy, and enable faster decision-making across business functions.

Deep Expertise in Enterprise AI Transformation

With extensive experience in implementing AI-driven solutions, we enable enterprises to progress beyond isolated use cases and establish scalable, secure, and responsible AI adoption. Our solutions drive efficiency across knowledge management, automation, and decision-making while ensuring seamless integration with enterprise systems.

Meet our team at the AWS Summit in NYC July 2025

An Iris team with extensive technology and domain experience is attending the AWS Summit on July 16, 2025, at the Jacob Javits Convention Center in New York City. Our professionals are excited to discuss the latest innovations and operational successes in Cloud Engineering, Application and Infrastructure Modernization, DevOps, Generative AI and Data Science & Analytics.

Contact our team at the AWS Summit, or through the links below, to learn more about our approach and the advanced technology solutions that are foundational to digital transformation. Leveraging emerging technologies can enhance security, scalability, reliability, productivity, cost-efficiency, customer experience, decision-making, and compliance, supporting the competitiveness and growth of enterprises in nearly every industry.

Connect with our team at AWS Summit:

- Prem Swarup – Vice President, Head of Data & Analytics Practice

- Ryan Fagan – Senior Client Partner, Banking & Financial Services

- Abhineet Jha – Head of Insurance IT Services

- Venkat Laksh – Head of Insurance IT Sales

- Glenn DeGeorge – Client Partner, Enterprise Services

- Samrat Ghosh – Client Partner, Enterprise Services

- Srikanth Jangam – Senior Client Partner, EdTech & Media

- Siddharth Garg – Client Partner, Enterprise Services

- Mayank Khanna – Delivery Partner, Enterprise Services

- Parijat Sharma – Client Partner, Enterprise Services

For more insights, read about our Cloud, Data & Analytics, and Generative AI services and success stories, as well as our perspective papers on Cloud Migration Challenges and Solutions, How Gen AI Can Transform Software Engineering, and Succeeding in ML Ops Journeys.

Event partner at Open Banking Expo Canada 2025

We are proud to be an event partner at Open Banking Expo Canada 2025, contributing to key conversations on how API ecosystems are reshaping the future of financial services. Connect with our team at the expo on June 17, 2025, at the Metro Toronto Convention Centre in Ontario, Canada.

Iris Software is focused on bringing value and transformative technology to leading global banks and financial services (BFS) providers, including those in the personal banking and payments sectors.

Subramanian Viswanathan, Associate Vice President, Financial Services Practice at Iris Software, will moderate a Powerhouse Debate titled ‘Open Banking & API Banking: Unlocking the future of financial services’ with leaders from BMO, TD, RBC, and Scotiabank. As Subramanian puts it, “The future of financial services lies in building connected ecosystems where data moves securely, and value moves instantly.”

For decades, Iris has accelerated the digital transformation journeys of major global and Canadian banks, financial services firms, and payments enterprises. Connect with our experts to learn how our solutions in AI/ML, Application Modernization, Automation, Cloud, Data Science, DevOps, Enterprise Analytics, Integrations, and Quality Engineering have improved clients’ data quality, reliability, and scalability; platform and systems efficiency; user interfaces and experiences; insight extraction and decision-making; and regulatory compliance.

For more information on the benefits of our future-ready solutions, visit Iris Software Banking and Financial Services.

Join us for Reuters Future of Insurance USA 2025

Reuters Events hosts its annual Future of Insurance USA Summit on June 12–13, 2025, in Chicago, Illinois. This year’s theme is Rebuild Trust at the Top, with a focus on the value that AI-powered tools, customer-centric products, and strategic collaborations can bring to insurers in all sectors of the industry. These topics are drawing more than 500 insurance leaders, innovators, and top technology providers like Iris Software to the Summit.

Abhineet Jha, Senior Client Partner, will represent our InsurTech team at the event.

Presentations and networking discussions at the 2025 Future of Insurance (FOI) Summit will address four main topic areas in support of the theme:

- Technology Innovation and Transformation, focusing on AI and Data Analytics

- Risk Management, including cyber threats and regulatory compliance

- Customer Experience, developing seamless UX, personalization, and enhanced communications

- Industry Collaboration, building strategic partnerships

These areas are a top focus of the InsurTech services and solutions that Iris successfully provides to leading carriers in property, casualty, specialty, and life and annuity insurance.

Discuss the evolving insurance landscape, InsurTech innovation, and your technology priorities with Abhineet Jha, our seasoned insurance expert, at Reuters Future of Insurance USA 2025. Learn how insurers are partnering with Iris and applying our solutions in AI/ML, Application Modernization, Automation, Cloud, Data Science, Enterprise Analytics, and Integrations to advance their digital transformation goals.

You can also contact our team and read more about our InsurTech Services and Solutions and successes in future-proofing insurance enterprises here: Insurance Technology Services | Iris Software.

Application Managed Services (AMS) for enterprise applications

These services encompass development, maintenance, and management, ensuring applications align with business objectives and deliver measurable value.

Service Offerings

Portfolio Assessment and Consulting

Cloud migration roadmap and implementation plan to meet business goals

Cloud Re-platforming

Cloud-ready applications built on a modern tech stack that are resilient and scalable with reduced TCO

Cloud Re-factoring

Applications developed on cloud-friendly architectures with new features to meet growth objectives

Cloud-native Development (Rewrite)

Born-in-the-cloud applications developed with a reimagined user experience and 12-factor design principles

Next-Gen Support

We leverage automation and Generative AI to minimize human intervention, enhance service reliability, and deliver context-aware application support. This approach streamlines repetitive tasks, accelerates issue resolution, and enables proactive problem prevention, driving efficiency and improved user satisfaction.

Value We Provide

Continuous Improvement

We drive continuous improvement across the development lifecycle by leveraging optimization and automation to enhance efficiency, reduce operational costs, and improve application performance.

24/7 Support

Our multi-geo teams provide round-the-clock support, ensuring consistent service coverage across time zones. This model enhances responsiveness, minimizes downtime, and delivers seamless operations globally.

Talent

We offer skilled resources and expertise to effectively support your critical business applications and systems, so you can focus on innovation instead of getting bogged down in routine tasks.

Meet our team at the Payments Canada Summit 2025

The 2025 Payments Canada Summit, themed Innovate-Collaborate-Transform, takes place May 6–8 at the Automotive Building in Toronto, Ontario. Considered Canada’s premier payments event, the forum brings together more than 1,900 participants to discuss innovation, challenges, and opportunities in this dynamic global industry. Financial institutions, payments and technology service providers, retailers, and monetary and regulatory professionals will connect, learn, and share ideas through presentations and networking sessions. Key topics focus on improving outcomes in digital payments, consumer experiences, risk and fraud mitigation, and cross-border and other payments policies.

Connect with three of our banking and financial services (BFS) technology experts at the Summit to discuss digital transformation and payment system modernization: Subramanian Viswanathan, Associate Vice President; Mehul Shah, Associate Director; and Suneela Katikala, Senior Client Partner.

The advanced technologies that Iris delivers across AI/Generative AI, Application Development, Automation, Cloud, DevOps, Data Science, Enterprise Analytics, and Integrations are driving innovation in the payments sector every day. Our experienced global team helps financial institutions and payments providers enhance operations, security, scalability, cost-efficiency, and compliance in the many platforms, processes, and systems supporting their domestic and international payments transactions. Iris has served BFS clients for more than 30 years and was named a Leader in BFS IT Services by Everest Group in its PEAK Matrix® Assessment 2025. Iris is also PCI DSS 4.0-certified to help ensure robust cybersecurity and compliance for clients that process payment cards or that store, process, or transmit cardholder data and/or sensitive authentication data.

Contact our team at the Summit or anytime at Iris Software Banking and Financial Services to learn more about our future-ready technology solutions. You can also read our Perspective Papers for insights on Real-world Asset Tokenization, leveraging Generative AI for Asset Tokenization, and the state of Central Bank Digital Currency.

Join us at the InsurTech Hartford Symposium

The InsurTech Hartford Symposium 2025 takes place April 29–30 at the Connecticut Convention Center in Hartford. The forum’s speakers, sessions, and attendees focus on innovation and how it is driving digital transformation and growth in the insurance industry.

Because most insurers’ strategic objectives include product line innovation, personalized customer experiences, operational efficiency and security, and compliance and risk management, partnering with experienced technology service providers like Iris Software is critical to their business success.

At the Symposium, insurance leaders and innovators can meet Venkat Laksh, Senior Client Partner and seasoned InsurTech professional, to learn how insurers are applying our advanced software engineering solutions in Application Development, Automation, AI/Generative AI, Data Science, Enterprise Analytics, and Cloud to modernize technology infrastructure, optimize business competencies, and secure their digital futures.

Connect with Venkat at the InsurTech Hartford Symposium 2025 to discuss the latest InsurTech innovations and trends. You can also contact Venkat and our InsurTech team and learn more about our InsurTech solutions and services here: Insurance Technology Services | Iris Software.

Everest Group Names Iris Software a Leader in BFS IT Services

Iris is proud to be recognized as a Leader in the inaugural Banking and Financial Services (BFS) IT Services Specialists PEAK Matrix® Assessment 2025 by Everest Group. This assessment is the first-ever for this category, and for Iris!

We were evaluated along with 29 other BFS IT service providers on our market presence, value delivery, strategic vision, innovation, technical capabilities, functional knowledge, and buyer references.

Pranati Dave, Practice Director, Everest Group, stated, “Iris Software’s expertise in capital markets and risk and compliance transformation, combined with a skilled talent pool and a strong reputation for execution, continues to drive its success with top-tier financial institutions. Clients have consistently recognized Iris for its low attrition, engineering rigor, and collaborative delivery model. Its investments in ML Ops, AI/ML, and modernization of cloud-based data and risk platforms have helped Iris earn a Leader recognition on Everest Group’s inaugural Banking and Financial Services (BFS) IT Services Specialists PEAK Matrix® Assessment 2025.”

Sunil Puri, President, Iris Software, stated, “We are honored to be named a Leader in BFS ITS by Everest Group. During our more than 30 years of growth in the BFS and other sectors, including Capital Markets & Investment Banking; Brokerage, Wealth & Asset Management; Commercial & Corporate Banking; Risk & Compliance; Retail Banking & Payments; Anti-Money Laundering & Know-Your-Customer; Insurance; Manufacturing; Logistics; Life Sciences and Professional Services, we have been a long-term partner in our clients' transformation journeys - helping to build, integrate and modernize complex platforms, systems, and applications.”

When IT matters most, clients call upon Iris to provide mission-critical software engineering and advanced application development services across AI/Generative AI, Automation, Cloud, Data & Analytics, Integrations, DevOps and Quality Engineering.

We remain steadfast in our vision of being our clients’ most trusted technology partner and thank our associates for their dedication and innovation.

Learn more about our BFS services, value proposition and client success stories and contact our team today.

Disclaimer

Licensed extracts taken from Everest Group’s PEAK Matrix® Reports, may be used by licensed third parties for use in their own marketing and promotional activities and collateral. Selected extracts from Everest Group’s PEAK Matrix® reports do not necessarily provide the full context of our research and analysis. All research and analysis conducted by Everest Group’s analysts and included in Everest Group’s PEAK Matrix® reports is independent and no organization has paid a fee to be featured or to influence their ranking. To access the complete research and to learn more about our methodology, please visit Everest Group PEAK Matrix® Reports.