How to win in the API economy with API Developer Portals

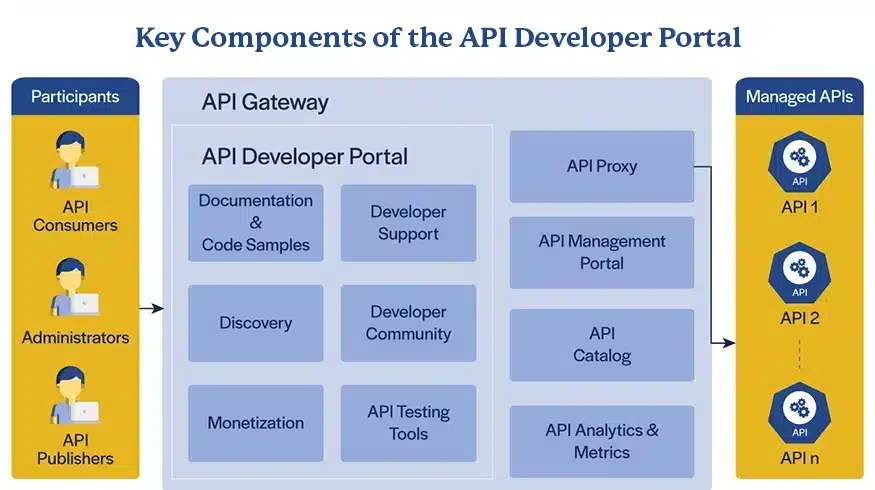


As enterprises advance their digital transformation, APIs have become critical both for integration within and across enterprises and for product and service innovation. As APIs grow in scale and complexity, a developer portal can significantly ease their rollout and adoption. This perspective paper explores the role of the API Developer Portal in the modern digital landscape that drives the API economy.

A Developer Portal makes APIs easier to understand, reduces integration time, and supports developers with training and with resolving API-related issues. This delivers significant business value by improving agility and enhancing customer experience. With a portal, enterprises can efficiently publish and consume APIs and keep integrations working as APIs move through successive versions. This helps enterprises realize the full benefit of their digital investments.

In an increasingly API-driven economy, an all-inclusive API Developer Portal can differentiate an enterprise from its competitors, help build trust with partners, and contribute to long-term success. Depending on the API platform in use, enterprises can adopt the portal built into that platform or develop a custom one. A custom API Portal may be easy to build at the start; however, adding advanced features requires a significant investment of time and resources. A well-thought-out approach is therefore necessary to make the right decisions and succeed in the broader API implementation and integration journey.

To learn more about the key drivers, components and features, implementation options, and potential benefits of API Developer Portals, download the perspective paper.
