Client

A leading home insurance enterprise

Goal

Expedite go-to-market strategy by integrating the policy admin system with multiple external services while ensuring scalability, security, and compatibility

Tools and Technologies

Unqork, API gateways, REST APIs, SOAP APIs

Business Challenge

The client sought to expedite its go-to-market strategy by integrating its Policy Admin system with multiple external services.

The objective was to swiftly implement these integrations while maintaining scalability, security, and compatibility with external services, thereby accelerating the organization's time-to-market for new products.

Solution

- Efficiently created APIs and seamlessly integrated them with the existing low-code/no-code enterprise application

- Used pre-built, pre-exposed API gateways to develop highly functional, adaptable APIs without manual coding

- Used Unqork to create both REST and SOAP APIs and integrate them with external APIs, ensuring optimal data access and distribution capabilities

Outcomes

- 40% faster time-to-market, enabling quicker product launches

- 25% increase in customer satisfaction due to improved system flexibility

- Enhanced scalability and security with seamless API integrations

Our experts can help you find the right solutions to meet your needs.

LIMRA 2025 Workplace Benefits Conference

The LIMRA (Life Insurance Marketing and Research Association) 2025 Workplace Benefits Conference takes place April 23-25, 2025, at the Encore Boston Harbor in Boston, MA. Under this year’s theme, Pathways to Growth, industry leaders and participants will examine the business and technology trends affecting the North American workplace benefits market. Speakers and attendees, primarily life, health, and related insurance carriers and brokers, along with employee benefits and technology solution providers, will explore strategies for growth while addressing shifting consumer needs, the latest digital tools and technologies, and an increasing focus on innovation, outcomes, and collaboration.

Venkat Laksh, Iris’ Global Lead – Insurance, and Senior Client Partner, will attend this forum. Iris provides leading insurance companies with advanced InsurTech services and solutions, including Software and Quality Engineering, AI/ML/Generative AI, Application Modernization, Automation, Cloud, Data Science, Enterprise Analytics and Integrations. These companies are applying next-generation, emerging technology through Iris to ensure their enterprises are future-ready, scalable, secure, cost-efficient, and compliant.

Talk about your digital priorities with Venkat at the LIMRA 2025 Workplace Benefits Conference and connect with our InsurTech team anytime to advance your digital transformation goals: Insurance Technology Services | Iris Software.

Enterprise-Grade DevOps for Scalable Blockchain DLT

Client

A leading provider of real-world asset (RWA) tokenization, digital currency, and interoperability solutions to the world’s largest financial players

Goal

To optimize blockchain DLT platforms for scalability, resilience, and seamless operations through enterprise-grade DevOps and SRE practices

Tools and Technologies

R3 Corda 5, Azure, AWS, G42, Docker, Kubernetes, Helm Charts, Terraform, Ansible, GitHub Actions, Azure DevOps, Jenkins, Prometheus, Grafana, Slack Integration

Business Challenge

Complex multi-node deployments required a mechanism to upgrade CorDapps, notaries, and workers across network participants without downtime or compatibility issues. Meanwhile, security and compliance risks demanded strict access controls, network segmentation, and security hardening to protect Corda nodes and ledger operations.

Scaling and automating the infrastructure required an efficient approach to onboarding new participants and managing network topology across cloud environments. In addition, without a real-time monitoring system, the client had no way to detect transaction failures, track node health, or raise proactive alerts.

To address security vulnerabilities, continuous scanning and security enforcement across CorDapps, containerized nodes, and CI/CD pipelines were required.

Solution

- Enabled zero-downtime CorDapp deployments with automated rollback and stateful upgrades, ensuring stability and ledger integrity

- Secured Corda nodes with RBAC, network segmentation, and security hardening, while optimizing autoscaling for dynamic ledger workloads

- Automated Corda network topology and participant onboarding using modular Terraform & Ansible configurations, ensuring scalability and repeatability

- Implemented real-time monitoring with Prometheus & Grafana, with Slack-based alerts for transaction failures and node health anomalies

- Ensured high availability and auto-healing for Corda network nodes and ledger operations

- Integrated DevSecOps with automated vulnerability scanning for CorDapps, containerized nodes, and CI/CD pipelines
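As a rough illustration of what "zero-downtime deployments with automated rollback" means, the sketch below upgrades nodes one at a time, so the others keep serving, and reverts every upgraded node if a health check fails. The `Node` class, `rolling_upgrade` function, and `health_check` callback are hypothetical names chosen for this example. The sketch does not model stateful upgrades or ledger integrity, and it is not the client's implementation. In practice this behavior typically comes from Kubernetes rolling updates and Helm rollbacks rather than hand-written code.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Node:
    """Hypothetical stand-in for a Corda node or worker running a CorDapp version."""
    name: str
    version: str


def rolling_upgrade(nodes: List[Node], new_version: str,
                    health_check: Callable[[Node], bool]) -> bool:
    """Upgrade nodes one at a time; roll back all upgraded nodes if any check fails.

    Returns True if every node ends on new_version, False if the rollout was reverted.
    """
    upgraded: List[Tuple[Node, str]] = []  # (node, previous_version), in upgrade order
    for node in nodes:
        previous = node.version
        node.version = new_version          # only this node changes; the rest keep serving
        upgraded.append((node, previous))
        if not health_check(node):
            # Automated rollback: restore previous versions in reverse order.
            for done, old in reversed(upgraded):
                done.version = old
            return False
    return True


# Usage: the third node fails its health check, so the whole rollout is reverted.
cluster = [Node("node-a", "1.0"), Node("node-b", "1.0"), Node("node-c", "1.0")]
ok = rolling_upgrade(cluster, "1.1", health_check=lambda n: n.name != "node-c")
print(ok, [n.version for n in cluster])  # False ['1.0', '1.0', '1.0']
```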

Outcomes

- Accelerated blockchain deployment cycles: CI/CD automation increased deployment frequency by 40% and reduced failures by 60%

- Optimized Kubernetes workloads for Corda 5: efficient resource management delivered 15% cost savings and improved ledger performance

- Scalable & secure blockchain infrastructure reduced manual intervention by 30%, enabling seamless scaling of Corda network participants

- Proactive incident management for DLT networks: real-time monitoring cut response time for blockchain issues by 50%, ensuring high availability

- Automated workflows accelerated development cycles by 25%, enhancing collaboration across blockchain and DevOps teams

Enhanced executive dashboard for streamlined reporting

Client

A leading specialty property & casualty insurer

Goal

Redesign the existing executive dashboard to improve user experience, streamline access to reports, and reduce manual efforts in onboarding new users and reports

Tools and Technologies

MS Azure, MS SQL, .NET Core

Business Challenge

The client aimed to revamp its executive dashboard to address usability concerns and enhance functionality. Users found the system difficult to navigate due to inconsistent flow and poor design. Essential information was not easily accessible in one place, leading to inefficiencies.

Additionally, onboarding a new report or user required extensive manual effort, including database updates, script creation, and multiple service tickets, resulting in turnaround times of 1-2 days.

Solution

As a strategic solution partner, Iris reimagined the executive dashboard with a focus on user experience and operational efficiency. Key enhancements included:

- Intuitive design improvements for seamless navigation and accessibility

- Expanded functionalities such as search, sorting, filtering, favorites, most reviewed, access requests, user management, notifications, and audit trail

- Development of a centralized reporting hub that provides a single access point for all reports with a mobile-friendly interface

- Deployment on Azure for seamless accessibility and performance optimization

Outcomes

- Improved customer experience and satisfaction through a user-friendly interface

- Onboarding time for new users and reports reduced from 1-2 days to 30 minutes

- Simplified report access with SSO integration and minimal clicks required to retrieve information

- Easier discovery and navigation of reports, with enhanced filtering and search capabilities

- Personalized user views with notifications for schedule delays or report errors

- Introduction of an admin section enabling UI-based user creation, notifications, and report access management

Leveraging Generative AI for asset tokenization

Enhance efficiency, security, and user experience by leveraging Generative AI in DLT-based asset tokenization.

Asset tokenization is the process of converting ownership rights to an asset, traditionally recorded in legacy systems, into a digital token on a Distributed Ledger Technology (DLT) platform. This transformation enables numerous benefits, including fractional ownership, 24/7 availability, easier transferability, and enhanced liquidity.
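To make "fractional ownership" and "easier transferability" concrete, here is a toy, in-memory model of one asset split into a fixed supply of tokens. The class name, asset ID, and holders are hypothetical. The sketch has none of the consensus, signing, or persistence a real DLT provides. It only shows that ownership becomes a share of a fixed token supply and that transfers move units without changing that supply.

```python
from fractions import Fraction


class TokenizedAsset:
    """Toy model of one asset divided into a fixed number of token units.

    Hypothetical illustration only: not a DLT, no consensus or signatures.
    """

    def __init__(self, asset_id: str, total_units: int, issuer: str):
        self.asset_id = asset_id
        self.total_units = total_units
        self.balances = {issuer: total_units}  # issuer initially holds every unit

    def transfer(self, sender: str, receiver: str, units: int) -> None:
        # Reject non-positive amounts and overdrafts so total supply is conserved.
        if units <= 0 or self.balances.get(sender, 0) < units:
            raise ValueError("invalid or insufficient units")
        self.balances[sender] -= units
        self.balances[receiver] = self.balances.get(receiver, 0) + units

    def ownership_share(self, holder: str) -> Fraction:
        return Fraction(self.balances.get(holder, 0), self.total_units)


building = TokenizedAsset("building-001", total_units=1_000, issuer="issuer")
building.transfer("issuer", "alice", 25)
print(building.ownership_share("alice"))  # 1/40
```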

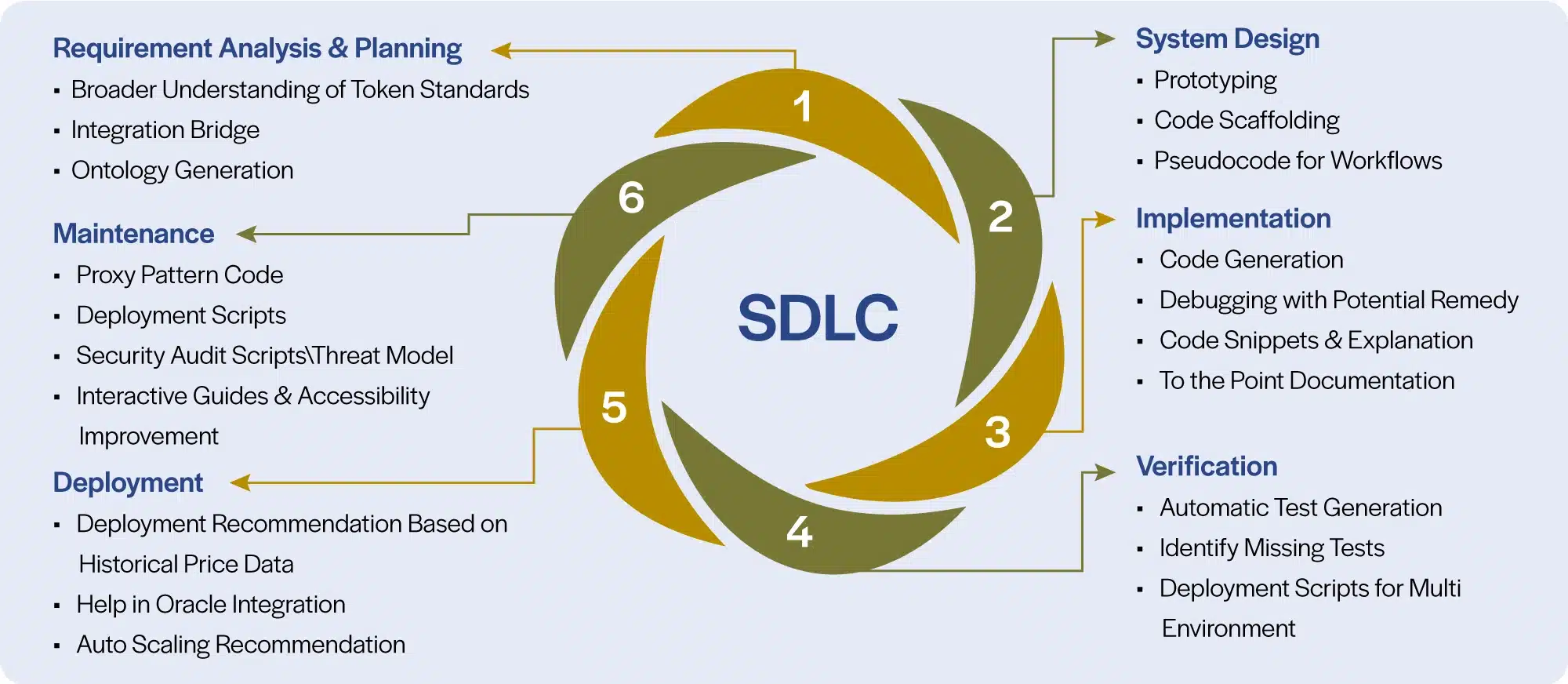

Developing and deploying a comprehensive asset tokenization system on DLT is a full-scale software development endeavor encompassing all SDLC phases. Every phase presents challenges, including technological complexity, evolving business use cases, a lack of standardization, scarce resources, and reluctance to adopt.

As asset tokenization emerges as an essential solution for financial institutions, the integration of Gen AI amplifies customer value. Institutions can achieve unprecedented efficiency, accuracy, and innovation by leveraging Gen AI's capabilities throughout the asset tokenization process.

Gen AI is set to play a pivotal role in improving asset tokenization by contributing to the different phases of its implementation. Gen AI can assist both during implementation and beforehand: it can help produce synthetic financial data that closely resembles real market conditions and conduct stress tests and other simulations, helping to strengthen the platform.

Read our Perspective Paper for more insights into the key phases, benefits and the road map to asset tokenization.

Seamless self-service portal integration with FINEOS

Client

A leading insurance provider specializing in absence management solutions

Goal

Migrate data and services from a legacy platform to FINEOS while ensuring a seamless user experience and improved system reliability

Tools and Technologies

Spring Boot, APIGEE, FINEOS AdminSuite, Splunk

Business Challenge

The client aimed to migrate data and services from its legacy Group Benefits Platform Transformation (GPT) system to FINEOS AdminSuite while maintaining a consistent user interface, improving data accuracy, and ensuring minimal downtime.

The system also required enhanced automation and monitoring to streamline absence creation for agents and employees.

Solution

- Developed APIs to integrate the legacy system with FINEOS AdminSuite for seamless absence management

- Designed an interactive self-service portal, allowing users to efficiently manage absences

- Used Spring Boot for service development and APIGEE for API integration

- Implemented Splunk for real-time monitoring and log analysis to enhance system reliability

Outcomes

- Increased system scalability, reliability, and data accuracy

- Enhanced user experience with a modern, intuitive interface

- Reduced time taken to create absences, improving efficiency for agents and employees

- Faster development cycles and better monitoring, ensuring proactive issue resolution

Streamlining policy administration with low-code development

Client

A leading home insurance enterprise specializing in policy administration solutions

Goal

Develop policy administration applications for partner insurance carriers with seamless third-party and home-grown app integration

Tools and Technologies

Low-Code/No-Code Platform, AWS, Agile Development, Containerization

Business Challenge

The client needed a scalable policy administration solution to support insurance carriers, integrate third-party applications, and enhance policy issuance and settlement.

The goal was to accelerate the go-to-market process for partners and agents while delivering premier services at competitive prices with superior policy coverage.

Solution

- Designed and developed a policy administration platform using a Low-Code/No-Code application development platform on AWS

- Built three Agile pods of five members each for rapid iteration and development

- Leveraged containerized development, ensuring each service had its own lifecycle for enhanced flexibility and scalability

- Established weekly releases with feature flags, so new functionality could be deployed to production and enabled only when the business was ready to adopt it
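Feature flags let a weekly release ship code to production while keeping it switched off until the business is ready. The minimal sketch below assumes an in-memory flag store with optional per-partner targeting. `FeatureFlags`, `issuance_workflow`, the flag name `new_issuance_flow`, and the partner IDs are hypothetical and do not represent any specific feature-flag product's API or the platform's actual design.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class FeatureFlags:
    """Hypothetical in-memory flag store with optional per-partner targeting."""
    enabled: Dict[str, bool] = field(default_factory=dict)
    allowed_partners: Dict[str, Set[str]] = field(default_factory=dict)

    def is_enabled(self, flag: str, partner: str) -> bool:
        if not self.enabled.get(flag, False):
            return False  # code is deployed but switched off
        allowed = self.allowed_partners.get(flag)
        return allowed is None or partner in allowed  # no list means all partners


def issuance_workflow(partner: str, flags: FeatureFlags) -> str:
    # The new flow ships in the release but only runs when its flag is on.
    if flags.is_enabled("new_issuance_flow", partner):
        return "v2"
    return "v1"


flags = FeatureFlags()
print(issuance_workflow("carrier-a", flags))  # v1 (flag not yet enabled)
flags.enabled["new_issuance_flow"] = True
flags.allowed_partners["new_issuance_flow"] = {"carrier-a"}
print(issuance_workflow("carrier-a", flags), issuance_workflow("carrier-b", flags))  # v2 v1
```

Keeping the on/off decision in data rather than in the release branch means a weekly deployment never forces a business-facing change; enabling a feature becomes a configuration update.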

Outcomes

- 200% growth in policy issuance

- Faster onboarding for agencies and agents

- Improved efficiency and scalability in policy administration

- Accelerated go-to-market for insurance partners and agents

InsurTech NY 2025 Spring Conference

The annual InsurTech NY Spring Conference takes place April 2-3, 2025, at Chelsea Piers in New York City. This year’s theme is “InsurTech is the New R&D.” The event brings together 900+ leaders and innovators in the industry, including carriers and brokers of life, health and disability, property and casualty (P&C), and specialty insurance; investors; and insurtech service providers like Iris Software. Each year, speakers and attendees focus on the technology and business management solutions that will enhance insurers’ operations, customer experience, and revenue streams.

Meet the leaders of our global InsurTech team at InsurTech NY’s 2025 Spring Conference: Ravi Chodagam, Vice President, and Venkat Laksh and Abhineet Jha, Senior Client Partners. As an integral, long-time technology partner to many top life, P&C, and specialty insurers, Iris has vast experience implementing agile, advanced technology and data solutions that help clients stay competitive and ahead of rapidly evolving trends in the dynamic insurance industry.

Discuss your tech priorities with Ravi, Venkat and Abhineet at the Spring Conference, or anytime, and learn how leading insurers apply our solutions in AI and Generative AI, Application Modernization, Automation, Cloud, and Data Science & Analytics to ensure their enterprises are future-ready, scalable, secure, cost-efficient, and compliant. You can also contact the team and learn more about our InsurTech Services here: Insurance Technology Services | Iris Software.

Gen AI platform offers future-ready capabilities

Client

A leading North American bank

Goal

Provide a wide range of AI capabilities for various risk and business teams and avoid building fragmented, outdated systems

Tools and Technologies

Amazon Bedrock and Titan V2, pgvector, Faiss, OpenSearch, Llama 7B, Claude 3.5 Sonnet and 3.7 Sonnet

Business Challenge

The Enterprise Risk function at a leading North American bank initiated a Generative AI (Gen AI) solution to offer a wide range of AI capabilities, including document intelligence, summarization, generation, translation, and more.

As the project evolved through proofs of concept and pilots, a key challenge emerged: the risk of creating a fragmented ecosystem with an overwhelming array of unmanageable bespoke solutions, model integrations, and reliance on potentially outdated models and libraries.

Solution

Drawing on prior engagements across clients, our team provided guidance on developing and delivering capabilities through a platform approach. We also set up a Minimum Viable Product team to iterate on new problem areas and solution approaches. The platform includes generalized capabilities such as:

- Document ingestion pipelines, with a choice of parsing approaches, embedding models, and vector index stores

- A factory model, with configurations for integrating new parsers, embedding models, LLM interfaces, and similar components, to quickly bring new capabilities to the platform

- User management, SSO integration, and entitlements management

- API integration to bring in information and data from internal and external sources

- Support for pgvector, Faiss, OpenSearch, Amazon Titan V2, Llama 7B, Claude 3.5 Sonnet, Claude 3.7 Sonnet, and more

- An intuitive chat interface for end users and for AI Masters (designated business users trained in prompt engineering and other techniques, who assemble new AI/Gen AI capabilities for users through configuration)
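A factory model driven by configuration is what lets a platform like this add a new parser, embedding model, or LLM interface without touching the pipelines that use it. The sketch below shows the idea for embedders only. It uses a registry that maps a config `type` string to a constructor. `register_embedder`, `build_embedder`, and the toy `CharCountEmbedder` are hypothetical names, not the bank's code or any vendor SDK. A real entry would wrap an actual model client, and the same pattern would apply to parsers and vector stores.

```python
from typing import Callable, Dict, List, Protocol


class Embedder(Protocol):
    def embed(self, text: str) -> List[float]: ...


# Registry mapping a configuration key to a constructor.
EMBEDDER_FACTORIES: Dict[str, Callable[..., Embedder]] = {}


def register_embedder(name: str):
    """Class decorator that makes an embedder available under a config name."""
    def decorator(cls):
        EMBEDDER_FACTORIES[name] = cls
        return cls
    return decorator


@register_embedder("char-count")
class CharCountEmbedder:
    """Toy stand-in for a real embedding model (hypothetical)."""

    def __init__(self, dims: int = 3):
        self.dims = dims

    def embed(self, text: str) -> List[float]:
        vec = [0.0] * self.dims
        for i, ch in enumerate(text):
            vec[i % self.dims] += ord(ch) % 7
        return vec


def build_embedder(config: dict) -> Embedder:
    """Build an embedder from configuration such as {"type": ..., "params": {...}}."""
    kind = config["type"]
    if kind not in EMBEDDER_FACTORIES:
        raise KeyError(f"unknown embedder type: {kind}")
    return EMBEDDER_FACTORIES[kind](**config.get("params", {}))


embedder = build_embedder({"type": "char-count", "params": {"dims": 4}})
print(len(embedder.embed("policy document")))  # 4
```

Adding a capability then means registering one new class and referencing it in configuration, rather than writing another bespoke integration. This is the fragmentation the platform approach is meant to avoid.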

Outcomes

- A future-ready Gen AI platform that can easily incorporate new capabilities and updates

- Multiple specific capabilities, called skills, for use by various risk teams and business users

- A forward-looking roadmap, including the ability to compose more complex capabilities from atomic capabilities

Data migration to cloud expedites credit risk functions

Client

A leading North American bank

Goal

Migrate credit risk data and SAS-based analytics models from on-premises data warehouse to AWS to enhance functionality

Tools and Technologies

AWS Glue, Redshift, DataSync, Athena, CloudWatch, SageMaker; Apache Airflow; Delta Lake; Power BI

Business Challenge

The credit risk unit of a major bank aimed to migrate SAS-based analytics models used for financial forecasting and sensitivity analysis to Amazon SageMaker.

The goal was to gain enhanced scalability, easier maintenance for MLOps engineers, and a better developer experience. The unit also sought to migrate credit risk data from a Netezza-based on-premises data warehouse to AWS, using a data lake on Amazon S3 and a data warehouse on Redshift to support the model migration.

Solution

- Decoupled data workload processing from relational systems using a phased approach that focused on historical migration, transformation complexity, data volumes, and the ingestion frequency of incremental loads

- Developed a flexible ETL framework that uses DataSync to extract data from Netezza to AWS as flat files

- Transformed data in S3 layers using Glue ETL and moved it to the Redshift data warehouse

- Enabled Glue integration with Delta Lake for incremental data workloads

- Built ETL workflows with Step Functions and orchestrated concurrent workflow runs with Apache Airflow

- Architected the data shift from Netezza to AWS on the flexible ETL framework
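Incremental loads usually follow a watermark-and-upsert pattern. Each run applies only rows changed since the last run and inserts or overwrites them by primary key. Delta Lake expresses this kind of upsert with its MERGE operation. The sketch below shows the same logic in plain Python, without Spark, Glue, or AWS. The `id` and `updated_at` columns, the `exposure` field, and the `incremental_merge` function are assumptions for illustration, not the client's schema or framework.

```python
from datetime import datetime
from typing import Dict, List, Tuple

Row = Dict[str, object]


def incremental_merge(target: Dict[int, Row], source: List[Row],
                      watermark: datetime) -> Tuple[Dict[int, Row], datetime]:
    """Upsert source rows changed after `watermark` into target, keyed by `id`.

    Mutates and returns target, along with the advanced watermark for the next run.
    """
    new_watermark = watermark
    for row in source:
        updated_at = row["updated_at"]
        if updated_at <= watermark:
            continue  # already loaded by an earlier run
        target[row["id"]] = row  # insert a new key or overwrite an existing one
        new_watermark = max(new_watermark, updated_at)
    return target, new_watermark


target = {1: {"id": 1, "exposure": 100, "updated_at": datetime(2024, 1, 1)}}
source = [
    {"id": 1, "exposure": 120, "updated_at": datetime(2024, 1, 3)},   # update
    {"id": 2, "exposure": 50, "updated_at": datetime(2024, 1, 2)},    # insert
    {"id": 3, "exposure": 75, "updated_at": datetime(2023, 12, 31)},  # stale, skipped
]
target, wm = incremental_merge(target, source, watermark=datetime(2024, 1, 1))
print(sorted(target), target[1]["exposure"], wm.date())  # [1, 2] 120 2024-01-03
```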

Outcomes

- Enhanced financial forecasting and sensitivity analysis operations with analytical models and data migrated to the AWS public cloud

- Expedited time-to-market for the client’s downstream consumption needs through Power BI and Amazon SageMaker
